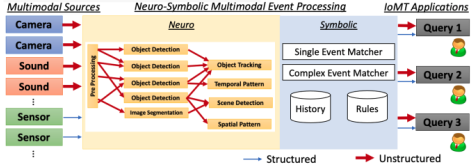

MEP provides a formal basis for handling multimodal content analysis (e.g., computer vision, linguistics, and audio) natively within the event processing paradigm. This supports real-time queries over multimodal data streams and simplifies the querying of multimodal event streams. Within the MEP paradigm, multimodal streams are processed to generate a knowledge graph representation, which can then be queried using the Multimodal Event Processing Language (MEPL). MEPL supports user-defined event operators, which can be developed with a hybrid neuro-symbolic approach that combines statistical DNN models with symbolic spatial and temporal reasoning. MEP engines can then be implemented using hybrid processing that combines neural (DNN) and symbolic (rule-based) models, together with the deployment and optimisation of multiple pipelines, as illustrated in the figure.
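The flow described above (stream, then knowledge graph, then query with a hybrid operator) can be illustrated with a minimal Python sketch. It is not MEPL and not the MEP engine: every name here (`Detection`, `left_of`, `frame_to_graph`, `match_window`) is hypothetical, the detections are hard-coded stand-ins for the output of a DNN object detector, and the graph is assumed to be a plain set of (subject, predicate, object) triples.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """One object found in a frame; a stand-in for a DNN detector's output."""
    label: str
    x_min: float
    x_max: float
    confidence: float


def left_of(a: Detection, b: Detection) -> bool:
    """Symbolic spatial rule: a lies entirely to the left of b."""
    return a.x_max < b.x_min


def frame_to_graph(frame_id, detections, min_conf=0.5):
    """Turn one frame's detections into (subject, predicate, object) triples."""
    # Neural side: keep only detections the (assumed) model is confident about.
    kept = [d for d in detections if d.confidence >= min_conf]
    triples = {(frame_id, "contains", d.label) for d in kept}
    # Symbolic side: derive spatial relations between the kept objects.
    for a in kept:
        for b in kept:
            if a is not b and left_of(a, b):
                triples.add((a.label, "left_of", b.label))
    return triples


def match_window(graphs, pattern, min_frames):
    """Temporal operator: True if `pattern` holds in at least
    `min_frames` consecutive frame graphs."""
    run = 0
    for graph in graphs:
        run = run + 1 if pattern in graph else 0
        if run >= min_frames:
            return True
    return False


# Hypothetical three-frame video stream (hard-coded instead of real DNN output).
stream = [
    [Detection("car", 0, 10, 0.9), Detection("person", 20, 25, 0.8)],
    [Detection("car", 2, 12, 0.9), Detection("person", 20, 25, 0.7)],
    [Detection("car", 30, 40, 0.9), Detection("person", 20, 25, 0.9)],
]

graphs = [frame_to_graph(i, dets) for i, dets in enumerate(stream)]
result = match_window(graphs, ("car", "left_of", "person"), min_frames=2)
print(result)
```

In this sketch the confidence threshold plays the role of the statistical model, while `left_of` and `match_window` play the role of symbolic spatial and temporal reasoning. A real engine would replace the hard-coded stream with live model inference and the set lookups with a proper graph query.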